Memory Management in Data Structures: A Beginner's Guide!

Part 17 of data structures series. Let's discover memory management critical role in Software Engineering.

In the world of software engineering, memory management is a critical skill. As programs grow in complexity, efficient memory use becomes essential for performance and stability. But for beginners in Software Engineering, understanding how memory is handled in different data structures can seem overwhelming. In this article, we'll break it down in a simple and approachable way so you can master memory management and avoid common pitfalls/mistakes.

🧠Understanding Memory: The Basics

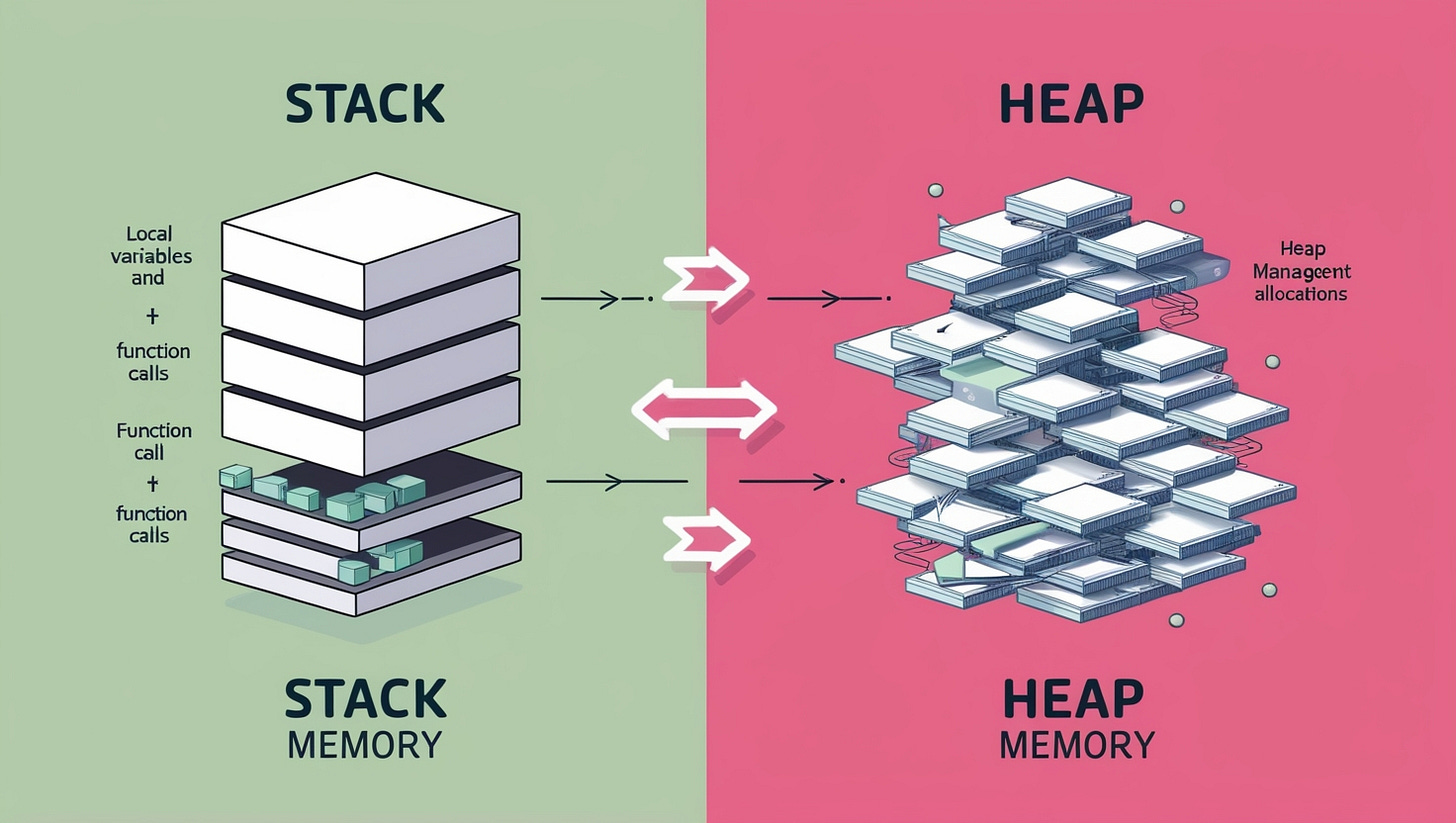

Before diving into specific data structures, it’s important to understand two fundamental concepts in memory management: the stack and the heap.

1. Stack Memory

The stack is used for static memory allocation. This is where variables created in functions (like local variables) are stored. Stack memory is fast because it follows a "Last In, First Out" (LIFO) principle. When a function is called, memory is allocated for its variables at the top of the stack. When the function finishes, that memory is automatically freed.

Advantages: Simple, fast, and efficient.

Limitations: Limited in size, and only works for data with a fixed lifetime (like function calls, for example).

2. Heap Memory

The heap is used for dynamic memory allocation, meaning you can request memory during runtime, and the size can vary. When you use dynamic data structures (e.g., linked lists or trees), memory is allocated from the heap. Unlike stack memory, heap memory must be manually freed when it’s no longer needed (in most programming languages🙂, I’m not talking here to “Rust” developers haha).

Advantages: Flexible, allows for dynamic memory allocation.

Limitations: Slower than stack memory, and improper handling can lead to memory leaks.

🔃Memory Management in Common Data Structures

Now that we’ve covered the basics, let’s explore how some of the most commonly used data structures manage memory.

1. Arrays

Arrays are one of the simplest data structures. When you create an array, you must define its size upfront. The memory is then allocated as a continuous block on the heap or stack, depending on whether it's dynamically or statically allocated.

Memory Use: Arrays are efficient in terms of memory access because of their continuous block of memory. This makes retrieving elements very fast (O(1) time complexity).

Limitations: Arrays have a fixed size. If you need more memory later, you’ll have to create a new, larger array and copy the elements over.

2. Linked Lists

Linked lists are dynamic structures where each element (called a "node") contains data and a reference (or pointer) to the next node. These nodes are allocated individually on the heap, meaning the list can grow or shrink during runtime.

Memory Use: Linked lists are flexible in memory allocation, as each node is allocated separately on the heap. You don't need to pre-define the size, like you do with arrays.

Challenges: Each node requires extra memory for the pointer to the next node, which can lead to overhead. Also, since you’re using heap memory, you need to ensure that memory is freed when nodes are removed to avoid memory leaks (Again, I’m not talking here to “Rust” developers haha).

3. Hash Tables

A hash table (or hash map) is a structure that uses a hash function to map keys to values. The memory is allocated for an array (or bucket) to store the data, and new memory can be dynamically allocated if the hash table needs to grow.

Memory Use: Hash tables use more memory because they may require extra space for handling collisions (situations where two keys generate the same hash). The hash table dynamically resizes, which means reallocating memory.

Challenges: Memory management in hash tables is typically handled automatically by resizing, but if you are manually managing a hash table, you must deallocate the memory for both the keys and values to avoid leaks (Again, I’m not talking here to you 😉).

4. Trees

Trees (such as binary trees) consist of nodes, where each node can have one or more children. Like linked lists, trees are dynamic data structures that grow or shrink as needed, with each node typically allocated from the heap.

Memory Use: Memory is allocated for each node on the heap, with each node containing data and pointers to its child nodes. The depth and structure of the tree can affect memory consumption, especially in unbalanced trees where one branch grows much deeper than others.

Challenges: Managing memory in trees requires careful consideration. When removing nodes, the memory allocated for both the node and its children should be properly deallocated to prevent leaks (Rust developers not concerned again, lol).

5. Stacks and Queues

Stacks and queues are linear data structures used for specific operations: stacks follow the "Last In, First Out" (LIFO) principle, while queues follow "First In, First Out" (FIFO). Both can be implemented using arrays (for fixed size) or linked lists (for dynamic size).

Memory Use: Arrays provide a simple implementation for stacks and queues, but have fixed sizes. Linked list implementations are more flexible and allow dynamic growth, allocating memory as needed on the heap.

Challenges: If you're using a dynamic approach, you'll need to manage memory by freeing up nodes when they’re no longer needed (I will not say it again, haha).

🚧Common Memory Management Issues

While using data structures, there are some common memory management problems you should be aware of.

1. Memory Leaks 💦

A memory leak occurs when your program allocates memory but never releases it, causing the program to use more memory over time until it eventually crashes. In languages with manual memory management (like C or C++), this often happens if you forget to free memory when it’s no longer needed.

PS: A simple solution is to use garbage collectors based languages, or just go for “RUST” and live a happy/horrible life, hahaha.

2. Dangling Pointers 👇👈👆

A dangling pointer refers to a situation where a pointer still refers to a memory location that has already been freed. This can lead to unpredictable behavior or crashes.

3. Fragmentation 🆓❌

Memory fragmentation occurs when there are many small, unused spaces between allocated memory blocks on the heap. Over time, fragmentation can make it difficult for your program to allocate large blocks of memory, leading to performance issues.

Best Practices for Managing Memory

Here are some best practices for managing memory when working with data structures:

Free Memory When Done: Always free dynamically allocated memory when you’re done with it, especially in languages that require manual memory management.

Use Smart Pointers (C++): If you’re working in C++, use smart pointers to automate memory management and avoid leaks.

Monitor Memory Usage: Use tools like memory profilers to monitor your program’s memory usage and detect leaks or inefficiencies.

Be Mindful of Data Structure Choice: Choose the data structure that best fits your memory needs. For example, if you need a fixed-size collection, use an array rather than a linked list.

PS: I have a beginner-friendly/funny series of articles about Data Structures from Arrays to Graphs! Check it out!

See you later guys 👋

Memory management is an essential aspect of working with data structures. Each structure has its own way of handling memory, whether it’s the static allocation of arrays or the dynamic allocation of linked lists and trees. Understanding how memory works in each data structure allows you to write more efficient code and avoid common issues like memory leaks or fragmentation. With practice and attention to detail, you’ll become a master of memory management, ensuring your programs run smoothly and efficiently! Bye!

🔔 Don't forget to subscribe to my blog and YouTube channel to stay updated with all the latest content!